Benchmarking

Benchmarks should be first-class citizens. We believe that performance is crucial for any system, and we strive to provide the best possible performance for our users. Please check why we believe transparent benchmarking is so important.

We've also built a benchmarking platform where anyone can upload benchmark results and compare them with those of others. It is another open-source project, available here.

Iggy comes with a built-in benchmarking tool, iggy-bench, for measuring the performance of the server. It is written in Rust and uses the tokio runtime for asynchronous I/O, mimicking the example client applications. The tool is designed to be as close to real-world scenarios as possible, so you can use it to estimate the performance of the server in your environment. It is part of the core repository and lives in the bench directory.

To run the benchmarks, first build the project in release mode:

cargo build --release

Then, run the benchmarking app with the desired options:

- Sending (writing) benchmark:

  cargo r --bin iggy-bench -r -- -v pinned-producer tcp

- Polling (reading) benchmark:

  cargo r --bin iggy-bench -r -- -v pinned-consumer tcp

- Parallel sending and polling benchmark:

  cargo r --bin iggy-bench -r -- -v pinned-producer-and-consumer tcp

- Balanced sending to multiple partitions benchmark:

  cargo r --bin iggy-bench -r -- -v balanced-producer tcp

- Consumer group polling benchmark:

  cargo r --bin iggy-bench -r -- -v balanced-consumer-group tcp

- Parallel balanced sending and polling from consumer group benchmark:

  cargo r --bin iggy-bench -r -- -v balanced-producer-and-consumer-group tcp

- End-to-end producing and consuming benchmark (a single task produces and consumes messages in sequence):

  cargo r --bin iggy-bench -r -- -v end-to-end-producing-consumer tcp

These benchmarks start the server with the default configuration, create a stream, topic, and partition, and then send or poll messages. The default configuration is tuned for the best performance, so you might want to adjust it to your needs. For more options, refer to the help output and examples of the iggy-bench subcommands.
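To make the measurement side concrete, here is a minimal sketch of how a sending benchmark can turn a timed loop into messages/s and MB/s. It is not iggy-bench's actual code: `run_send_benchmark`, `BenchResult`, and the `send_batch` callback are hypothetical names. In a real run the callback would send the batch to the server over the chosen transport; here it only counts bytes so the example stays self-contained.

```rust
use std::time::{Duration, Instant};

/// Totals and wall-clock time for one sending run.
struct BenchResult {
    total_messages: u64,
    total_bytes: u64,
    elapsed: Duration,
}

impl BenchResult {
    /// Messages per second over the whole run.
    fn messages_per_sec(&self) -> f64 {
        self.total_messages as f64 / self.elapsed.as_secs_f64()
    }

    /// Throughput in MB/s (decimal units: 1 MB = 1_000_000 bytes).
    fn megabytes_per_sec(&self) -> f64 {
        self.total_bytes as f64 / 1_000_000.0 / self.elapsed.as_secs_f64()
    }
}

/// Hypothetical sending loop: hands the same pre-built batch to `send_batch`
/// `batches` times and times the whole run.
fn run_send_benchmark<F>(
    batches: u64,
    messages_per_batch: u64,
    message_size: usize,
    mut send_batch: F,
) -> BenchResult
where
    F: FnMut(&[Vec<u8>]),
{
    // Build the payload once so allocation is excluded from the timed section.
    let batch: Vec<Vec<u8>> = (0..messages_per_batch)
        .map(|_| vec![0u8; message_size])
        .collect();

    let start = Instant::now();
    for _ in 0..batches {
        send_batch(&batch[..]);
    }
    let elapsed = start.elapsed();

    BenchResult {
        total_messages: batches * messages_per_batch,
        total_bytes: batches * messages_per_batch * message_size as u64,
        elapsed,
    }
}

fn main() {
    // Stand-in for a real client: it only counts the bytes it is handed.
    let mut received: usize = 0;
    let result = run_send_benchmark(1_000, 1_000, 1_000, |batch| {
        received += batch.iter().map(|m| m.len()).sum::<usize>();
    });
    println!(
        "sent {} messages ({} bytes counted) in {:?}: {:.0} msg/s, {:.1} MB/s",
        result.total_messages,
        received,
        result.elapsed,
        result.messages_per_sec(),
        result.megabytes_per_sec()
    );
}
```

Pre-building the batch keeps payload allocation out of the timed section, so the measured time reflects only the sending path.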

For example, to run a benchmark against an already running server, provide the additional argument --server-address 0.0.0.0:8090.

Depending on the hardware, the transport protocol (quic, tcp, or http), and the payload size (messages-per-batch * message-size), you might see throughput of over 5000 MB/s (e.g. 5M messages of 1 KB each per second) for both writes and reads.
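The throughput figure is simply message rate multiplied by message size. The sketch below assumes decimal units (1 KB = 1000 bytes, 1 MB = 1,000,000 bytes), which is what makes 5M messages/s of 1 KB each come out at exactly 5000 MB/s; the function names are hypothetical and not part of iggy-bench.

```rust
/// Bytes carried by one batch: messages-per-batch * message-size.
fn batch_payload_bytes(messages_per_batch: u64, message_size_bytes: u64) -> u64 {
    messages_per_batch * message_size_bytes
}

/// Throughput in MB/s, assuming decimal units (1 MB = 1_000_000 bytes).
fn throughput_mb_per_sec(messages_per_sec: u64, message_size_bytes: u64) -> f64 {
    (messages_per_sec * message_size_bytes) as f64 / 1_000_000.0
}

fn main() {
    // A batch of 1000 messages of 1 KB each carries 1_000_000 bytes.
    println!("batch payload: {} bytes", batch_payload_bytes(1_000, 1_000));
    // 5_000_000 msg/s * 1_000 B = 5_000_000_000 B/s = 5000 MB/s.
    println!("throughput: {} MB/s", throughput_mb_per_sec(5_000_000, 1_000));
}
```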

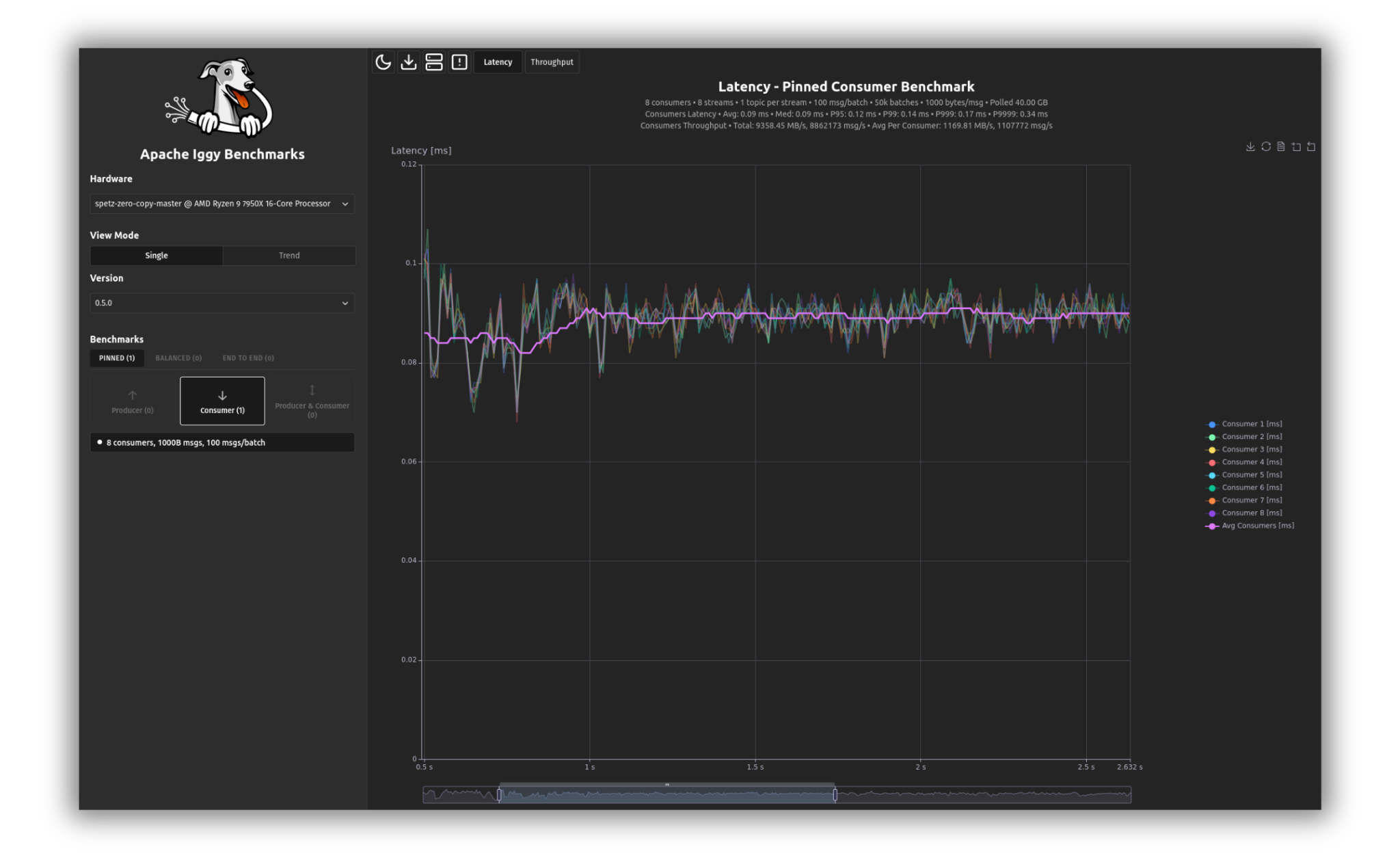

Iggy is already capable of processing millions of messages per second with p99+ latency in the microsecond range, and with upcoming optimizations such as io_uring support and a shared-nothing design, it will only get better.
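A p99 latency is the value that 99% of measured latencies do not exceed. As an illustration, here is a minimal sketch of the nearest-rank percentile method; iggy-bench may compute percentiles differently, and both the `percentile` helper and the latency samples are hypothetical.

```rust
/// Nearest-rank percentile: the smallest sample such that at least `p` percent
/// of all samples are less than or equal to it. Sorts `samples` in place.
/// Returns None for an empty slice or a `p` outside (0, 100].
fn percentile(samples: &mut [u64], p: f64) -> Option<u64> {
    if samples.is_empty() || !(p > 0.0 && p <= 100.0) {
        return None;
    }
    samples.sort_unstable();
    let n = samples.len();
    // rank = ceil(p / 100 * n), computed as (p * n) / 100 so round inputs stay exact.
    let rank = ((p * n as f64) / 100.0).ceil() as usize;
    Some(samples[rank.clamp(1, n) - 1])
}

fn main() {
    // Hypothetical per-message latencies in microseconds: 1, 2, ..., 1000.
    let mut latencies_us: Vec<u64> = (1..=1000).collect();
    let p50 = percentile(&mut latencies_us, 50.0).unwrap();
    let p99 = percentile(&mut latencies_us, 99.0).unwrap();
    println!("p50 = {p50} us, p99 = {p99} us");
}
```

The "+" in p99+ refers to higher percentiles such as p99.9, which the same function handles by passing a larger `p`.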